👋 HI, NICE TO MEET YOU

My name is Abdelkrim Alfalah. I live in SE London, UK. I'm a happy husband and father.

I have been fortunate enough to experience diverse cultures and contexts. I strongly believe that innovation's magic happens at the crossroads between disciplines. It materialises when we take patterns and frameworks from one context and apply them unexpectedly in others.

Main >>

CPO @t FACT360

At present, my focus is on FACT360, where I work as Chief Product Officer (CPO) alongside a dedicated team of salespeople and data scientists. FACT360 is committed to becoming the best Behavioural Analytics solution for the Insider Threat & Forensics markets. I help shape and drive the product, ensuring it delivers value to our portfolio of clients and partners.

ProductNumbers Newsletter

In addition, I run a newsletter 📰 teaching product analytics 🎁📈 & experimentation 🔬. Its mission is to help aspiring Product Analysts learn and join this fascinating industry. It includes inspiring products, cool ideas, people to follow, news to read, tools to try, jobs, and other delicious spam. If you're into that stuff, subscribe to the newsletter!

Other Projects

I love nothing more than working on a new idea. Come and see my successful projects and my ideas garden & graveyard.

Experiments >>

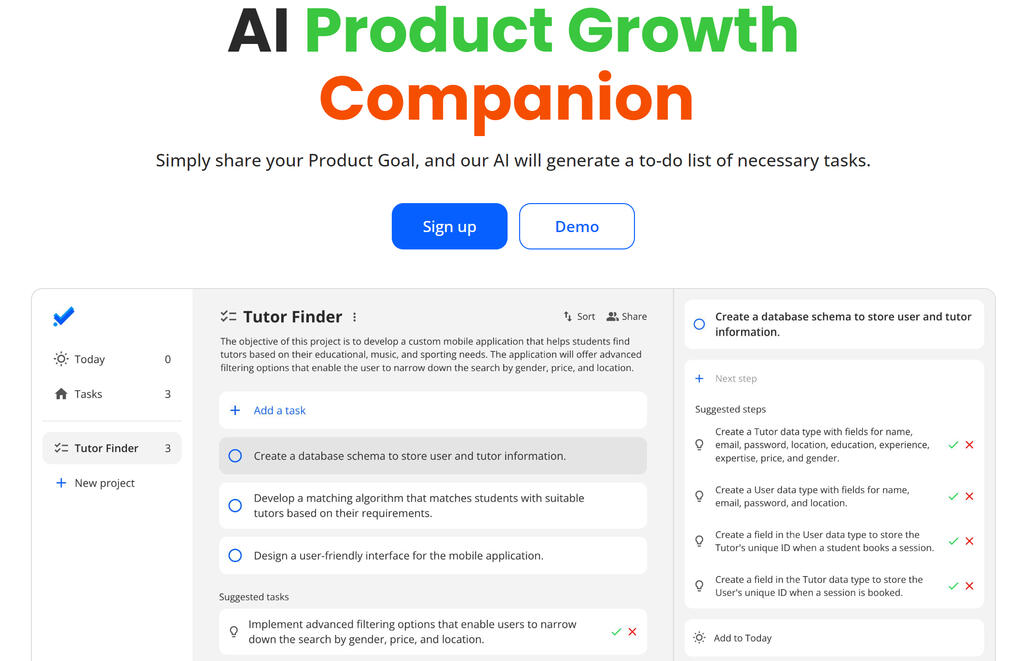

Product Companion

AI Product Growth Companion. Simply share your Product Goal, and our AI will generate a to-do list of necessary tasks.

ProductQuiz

As a great believer in the power of questions, I have gathered in this quiz the questions that help you gain clarity on your Product.

Educational Writing >>

Online Course for UoL

I co-built this course on discrete mathematics during my PhD studies @t Goldsmiths, University of London. The course is taught as part of the BSc Computer Science. It is a great opportunity to learn sets, combinatorics, functions, and graph theory.

Articles & Posts

Custom Websites // Carrd Templates // Tutorials

Get In Touch With Me!

Forms are boring, so I've kept it short. Don't forget to leave your name!